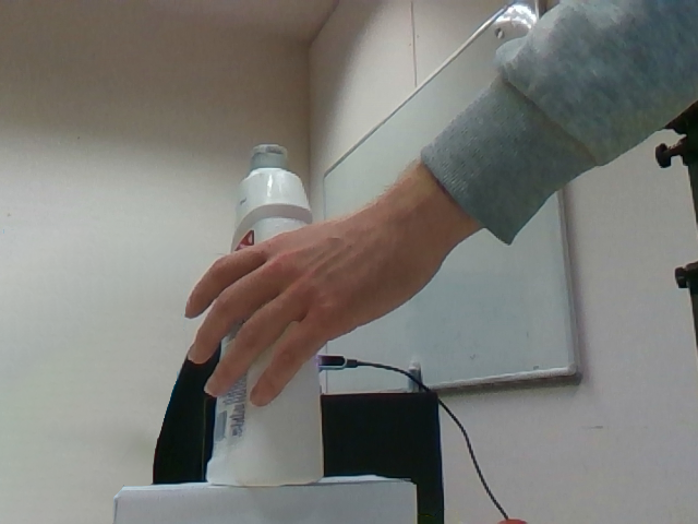

Different Objects (Seen), Same Text Prompt

"Pick up the <object> and offhand it to the other hand."

Generating natural hand-object interactions in 3D is challenging because the resulting hand and object motions must be both physically plausible and semantically meaningful. Furthermore, generalization to unseen objects is hindered by the limited scale of available hand-object interaction datasets. In this paper, we propose a novel method, dubbed DiffH2O, which synthesizes realistic one- or two-handed object interactions from text prompts and the geometry of the object. The method introduces three techniques that enable effective learning from limited data. First, we decompose the task into a grasping stage and a text-based manipulation stage and use a separate diffusion model for each. In the grasping stage, the model generates only hand motions, whereas in the manipulation stage both hand and object poses are synthesized. Second, we propose a compact representation that tightly couples hand and object poses and helps generate realistic hand-object interactions. Third, we propose two guidance schemes that allow more control over the generated motions: grasp guidance and detailed textual guidance. Grasp guidance takes a single target grasping pose and guides the diffusion model to reach this grasp at the end of the grasping stage, which provides control over the grasping pose. Given a grasping motion from this stage, multiple different actions can be prompted in the manipulation stage. For textual guidance, we contribute comprehensive text descriptions to the GRAB dataset and show that they give our method more fine-grained control over hand-object interactions. Our quantitative and qualitative evaluations demonstrate that the proposed method outperforms baseline methods and produces natural hand-object motions. Moreover, we demonstrate the practicality of our framework by using a hand pose estimate from an off-the-shelf pose estimator for guidance and then sampling multiple different actions in the manipulation stage.
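The abstract describes grasp guidance only at a high level. As an illustration, here is a minimal sketch of one common pattern for inference-time guidance in a diffusion sampler: at every denoising step, the predicted clean motion is nudged so that its final frame moves toward a target grasp. This is not DiffH2O's published implementation. The pose layout (one row of hand pose parameters per frame), the function names `grasp_guidance` and `sample_with_grasp_guidance`, the toy blending update that stands in for a real DDPM/DDIM step, and the identity denoiser that stands in for a trained grasping-stage network are all hypothetical.

```python
import numpy as np


def grasp_guidance(x0_pred: np.ndarray, target_grasp: np.ndarray, scale: float) -> np.ndarray:
    """Pull the final frame of a predicted clean motion toward a target grasp.

    Takes one gradient step on 0.5 * ||x0_pred[-1] - target_grasp||^2.
    The gradient is non-zero only on the last frame, so earlier frames
    are left unchanged by this step.
    """
    grad = np.zeros_like(x0_pred)
    grad[-1] = x0_pred[-1] - target_grasp
    return x0_pred - scale * grad


def sample_with_grasp_guidance(denoise, target_grasp, num_frames, num_steps=50, scale=0.5, seed=0):
    """Toy reverse-diffusion loop with grasp guidance on each x0 estimate.

    denoise(x_t, t) -> x0 estimate (hypothetical stand-in for a trained model).
    The update below is a simplified deterministic blend, not a real DDPM/DDIM step.
    """
    rng = np.random.default_rng(seed)
    x_t = rng.standard_normal((num_frames, target_grasp.shape[0]))
    for t in range(num_steps, 0, -1):
        x0 = grasp_guidance(denoise(x_t, t), target_grasp, scale)
        keep = (t - 1) / num_steps  # fraction of the current sample kept (toy schedule)
        x_t = keep * x_t + (1.0 - keep) * x0
    return x_t


def identity_denoiser(x_t, t):
    # Placeholder for a trained grasping-stage network.
    return x_t


if __name__ == "__main__":
    target = np.array([0.2, -0.1, 0.4])  # hypothetical 3-D "grasp pose"
    motion = sample_with_grasp_guidance(identity_denoiser, target, num_frames=30)
    print("max deviation of final frame from target:", np.abs(motion[-1] - target).max())
```

Because the guidance gradient touches only the last frame, this toy version corrects the end pose and nothing else. With a trained model, the subsequent denoising steps would be expected to adapt the preceding frames so that the approach motion stays consistent with the guided grasp.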

We provide carefully annotated text descriptions for the GRAB dataset (Taheri et al., 2020).


"Pick up the wineglass and drink from it."

"Take the wineglass and make a toast."

"Get the wineglass and pass it to someone else."

"Inspect the apple."

"Pass the piggybank."

"Pass the bleach."

"Drink from the bleach."

"Pick up and put down the bleach."

"Pass the canned meat."

"Pick up and put down the canned meat."

@inproceedings{christen2024diffh2o,
  title={DiffH2O: Diffusion-based synthesis of hand-object interactions from textual descriptions},
  author={Christen, Sammy and Hampali, Shreyas and Sener, Fadime and Remelli, Edoardo and Hodan, Tomas and Sauser, Eric and Ma, Shugao and Tekin, Bugra},
  booktitle={SIGGRAPH Asia 2024 Conference Papers},
  year={2024}
}